\def \ititle {Lecture 11}

\def \isubtitle {Joint Action}

\begin{center}

{\Large

\textbf{\ititle}: \isubtitle

}

\iemail %

\end{center}

\section{Aggregate Subjects: Recap}

In what ways might the notion of an aggregate subject help us to understand

which forms of shared agency underpin our social nature?

To answer this question, we first need to consider a more basic question:

How can aggregate subjects exist?

In a previous lecture we considered the suggestion (developed by Pettit and List among others) that

aggregate subjects arise as a consequence of individuals representing

them.

Why should we consider other suggestions?

Which forms of shared agency underpin our social nature?

What distinguishes joint action from parallel but merely individual action?

In the first part of this course we saw that there are reasons to reject

both the Simple View and Bratman’s view (a counterexample, no less),

and significant challenges to extracting

an answer to these questions from Gilbert’s work.

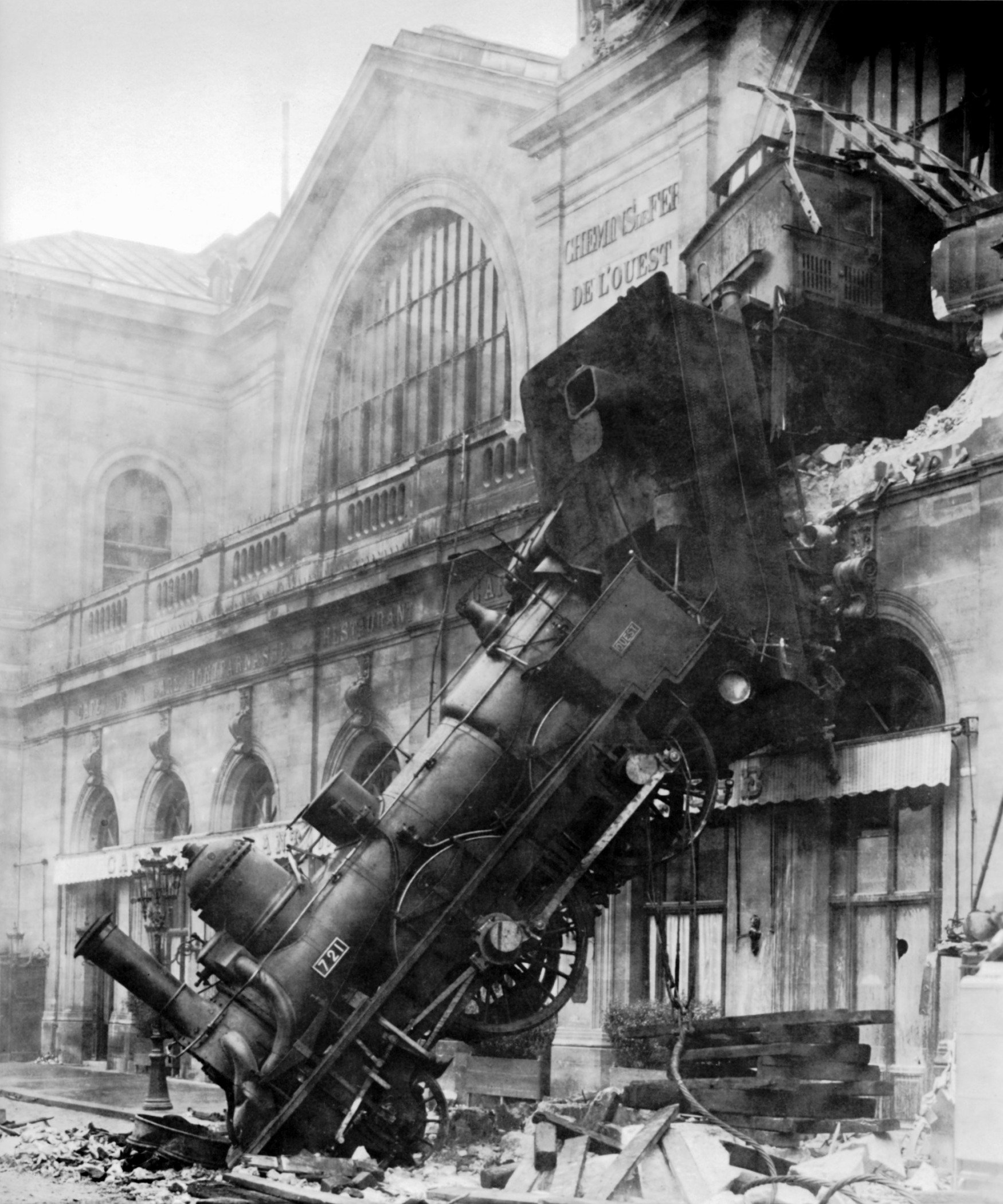

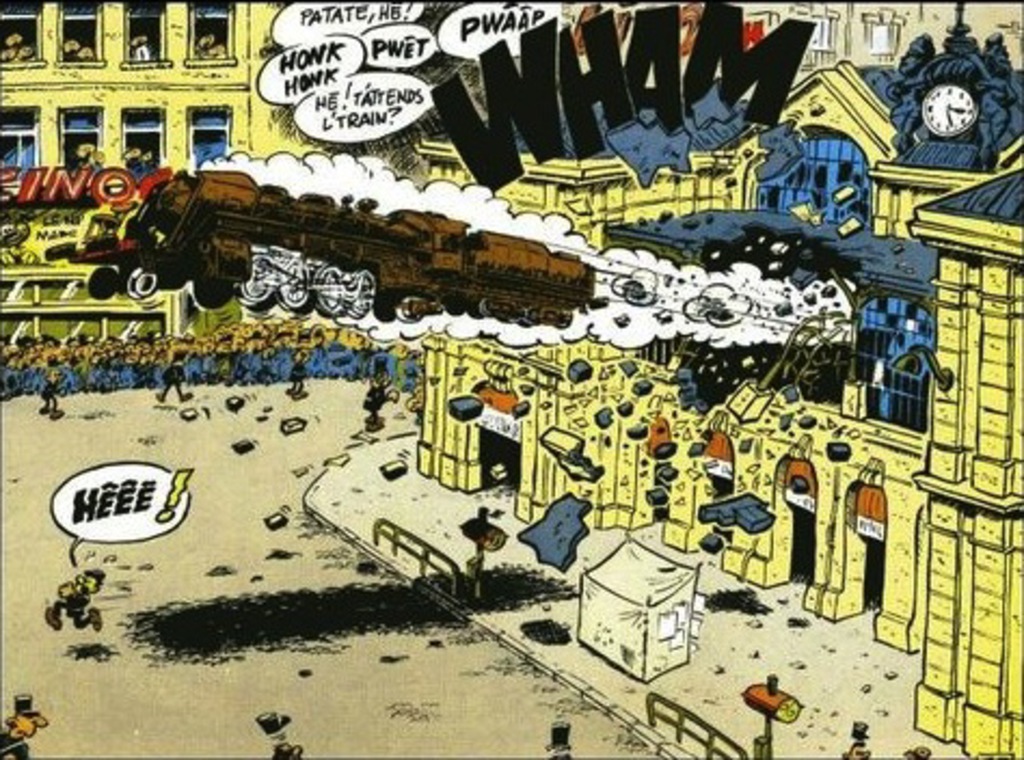

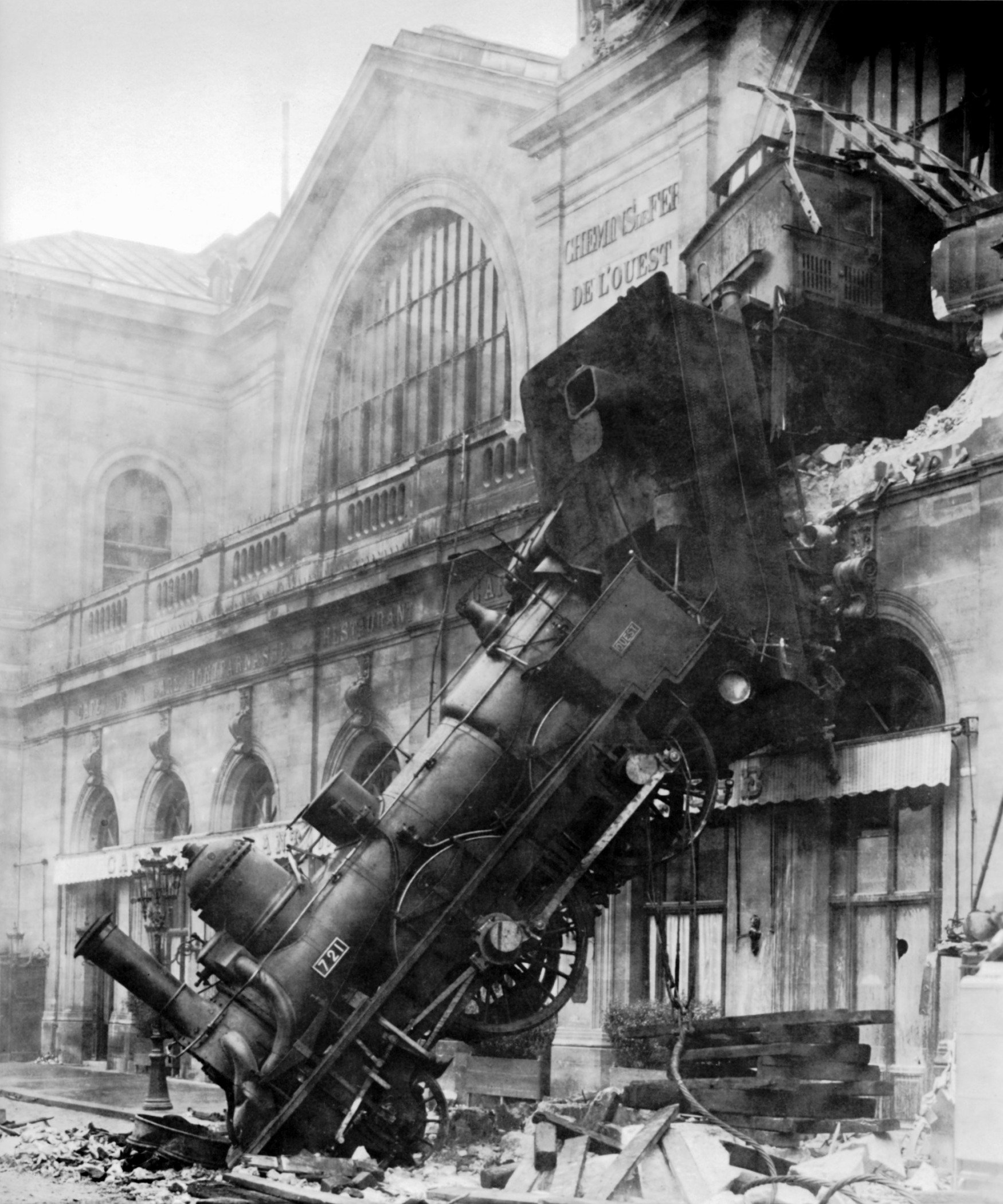

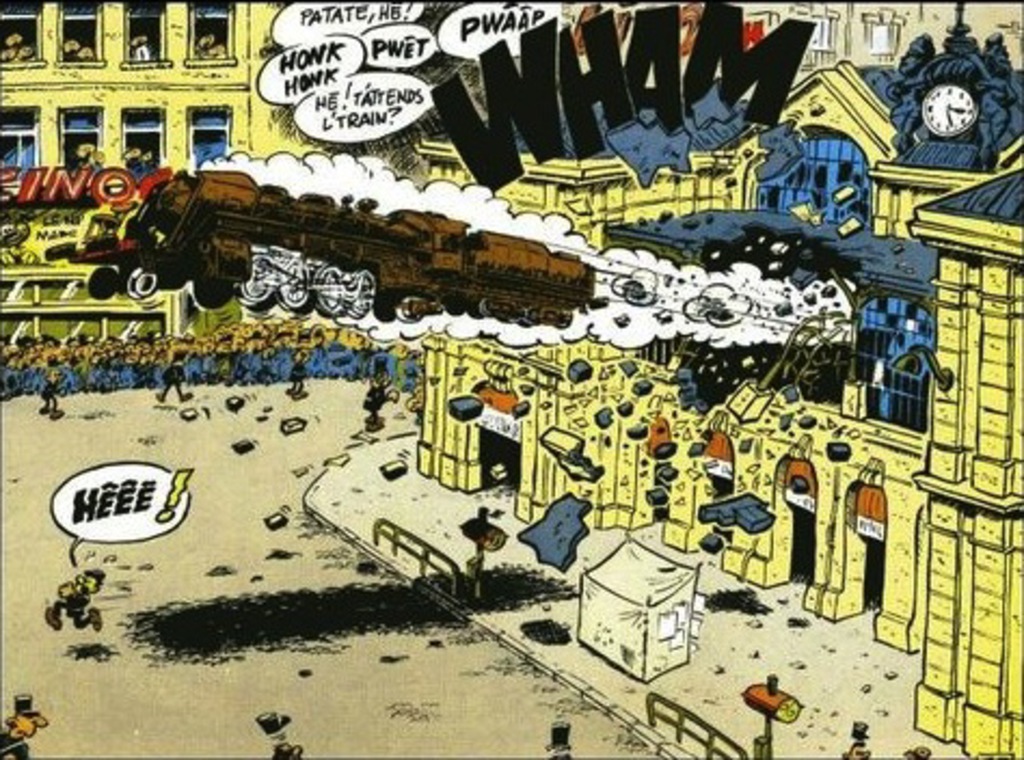

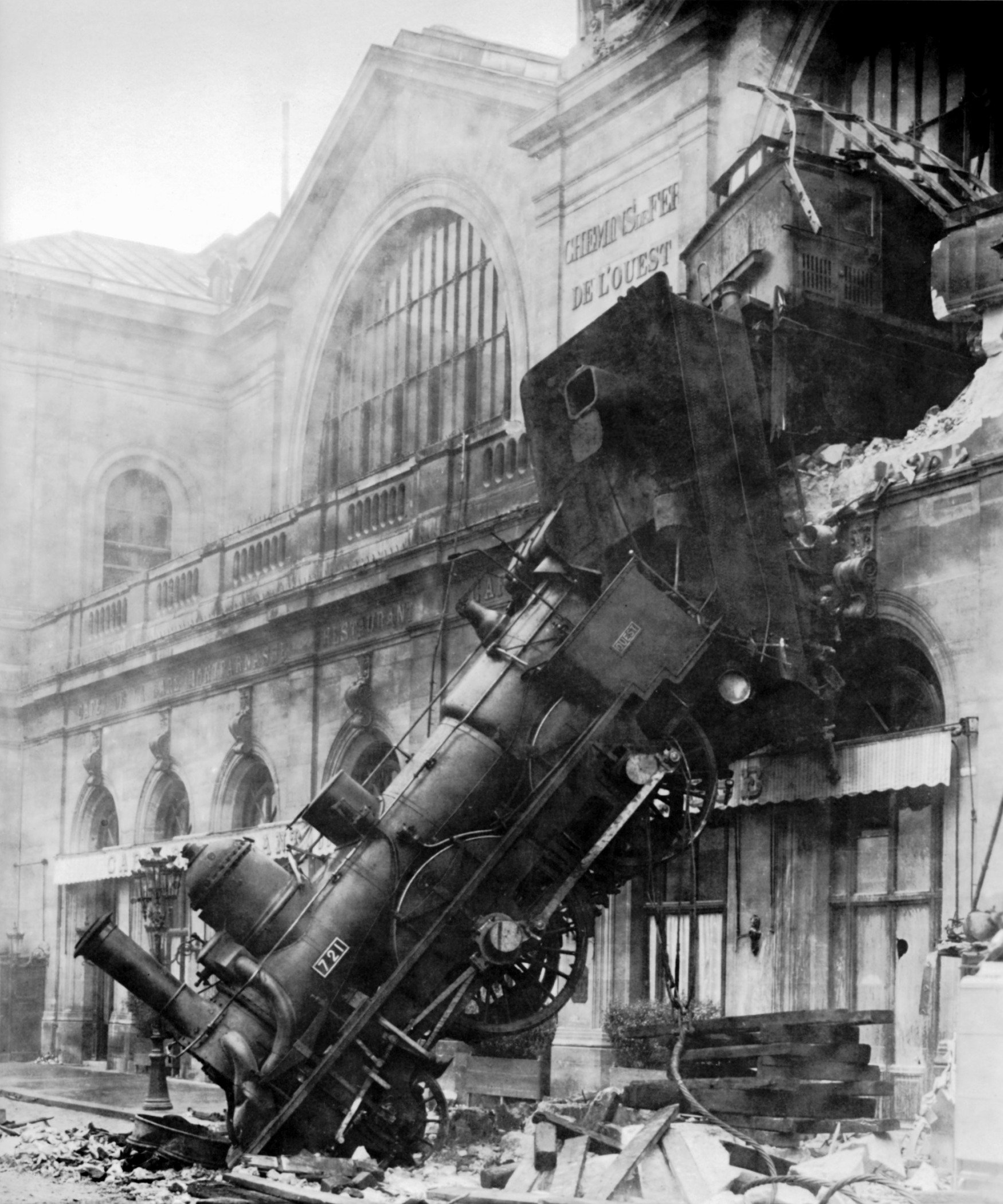

When we look at the leading accounts, it’s not much of an exaggeration

to talk about a train wreck.

Maybe we can get further by adopting a more radical approach.

aggregate subject

The more radical approach we are in the middle of exploring

hinges on aggregate subjects.

An aggregate subject is a subject with proper parts who are themselves

subjects.

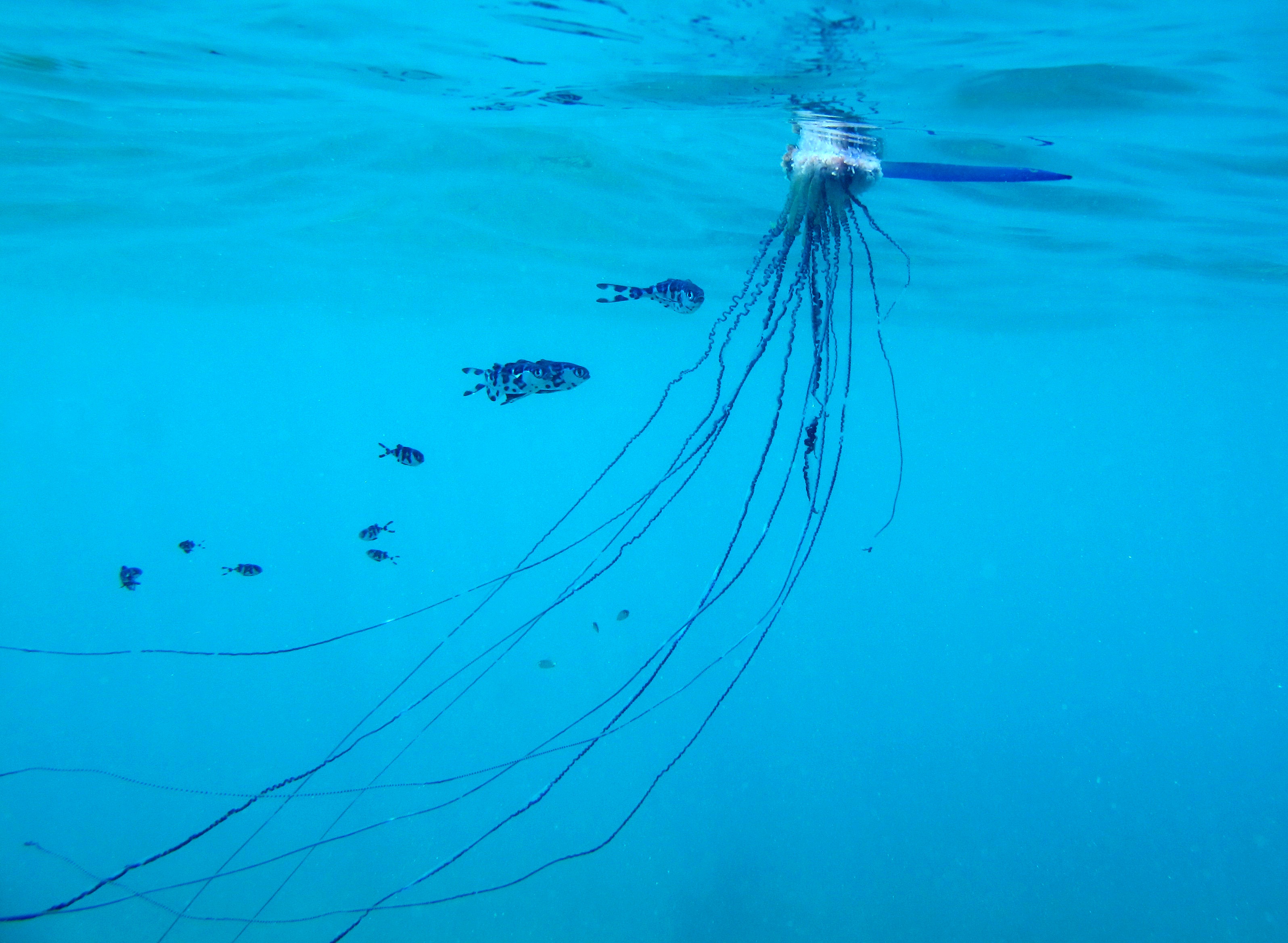

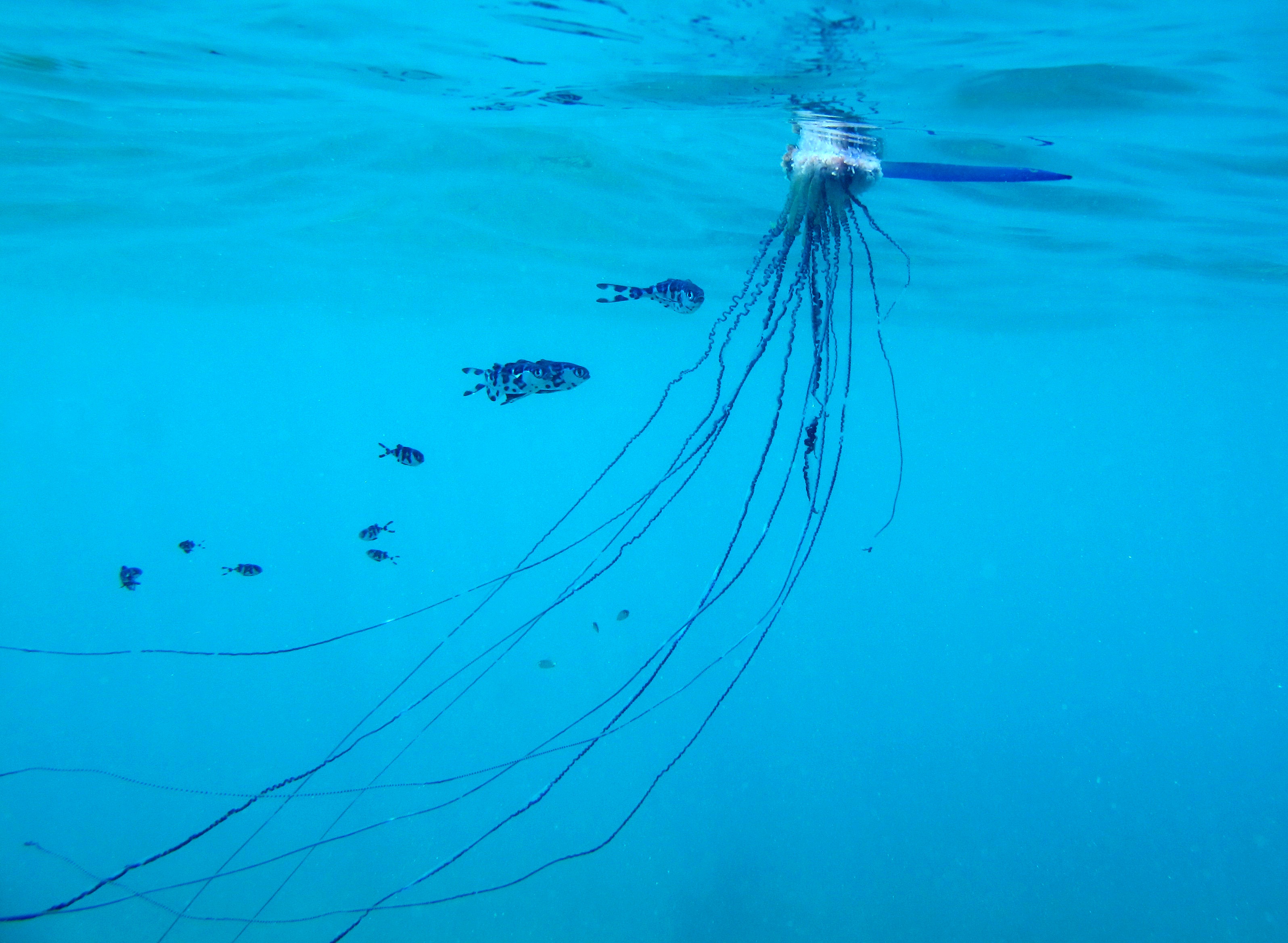

I compared aggregate subjects to an aggregate animal, the Portuguese man o' war (Physalia physalis),

which is composed of polyps.

Here you can say that ‘the group [of polyps] itself’ is engaged in action

which is not just a matter of the polyps all acting.

But how can such a thing exist?

Humans do not mechanically attach themselves in the way that the polyps

making up a Portuguese man o' war do.

So (as we saw in the last lecture) we have to ask, How are aggregate agents possible?

It was striking that many people took the view that such things just couldn’t exist,

just as Searle did.

One thing I aim to do in this lecture is to convince you that their existence is not

quite as strange as you might think.

But first I want to remind you of a couple of themes from the last lecture.

\section{Team Reasoning}

‘You and another person have to choose whether to click on A or B.

If you both click on A you will both receive £100, if you both click on B you will both receive £1,

and if you click on different letters you will receive nothing. What should you do?’

(Bacharach 2006, p. 35)

Team reasoning is a game-theoretic attempt to explain what makes your both choosing A rational.
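To see why there is anything to explain here, it may help to check what standard individual best-response reasoning says about this game. The following is a minimal sketch, not anything taken from Bacharach: the payoff table encodes the quoted example, and the names `PAYOFFS` and `is_nash_equilibrium` are hypothetical, introduced only for illustration.

```python
from itertools import product

ACTIONS = ("A", "B")

# Payoffs in pounds as (you, other), taken from the quoted example.
PAYOFFS = {
    ("A", "A"): (100, 100),
    ("B", "B"): (1, 1),
    ("A", "B"): (0, 0),
    ("B", "A"): (0, 0),
}


def is_nash_equilibrium(profile):
    """Return True if neither player gains by switching unilaterally."""
    for player in (0, 1):
        current = PAYOFFS[profile][player]
        for alternative in ACTIONS:
            deviation = list(profile)
            deviation[player] = alternative
            if PAYOFFS[tuple(deviation)][player] > current:
                return False
    return True


equilibria = [p for p in product(ACTIONS, repeat=2) if is_nash_equilibrium(p)]
print(equilibria)  # [('A', 'A'), ('B', 'B')]
```

Both matching profiles come out as equilibria: if you expect the other to click B, clicking B is your best reply. So best-response reasoning alone does not single out A, which is the gap team reasoning is meant to fill.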

But what is team reasoning? And how is team reasoning relevant to questions about joint action?

This unit provides the barest outline. The aim is not to explain, but to make you aware that

there’s a body of research in this area.

How to create an aggregate subject?

1. self-representation (just done)

2. team reasoning?

There is a further, alternative motivation for considering team reasoning.

Some have claimed that it will provide us with a ground-level account of shared

intention, and thereby of shared agency ...

‘The key difference between the two kinds of intention is not a property of the intentions themselves, but of the modes of reasoning by which they are formed. Thus, an analysis which starts with the intention has already missed what is distinctively collective about it’

\citep{Gold:2007zd}

So these researchers, unlike Pettit and List, are aiming to capture a basic

form of shared agency rather than to build on a prior account of shared intention.

But there’s more ...

‘collective intentions are the product of a distinctive mode of practical reasoning, team reasoning, in which agency is attributed to groups.’

\citep{Gold:2007zd}

So these researchers are aiming to build a kind of aggregate subject.

They think, in a nutshell, that aggregate subjects are not only a consequence

of self-reflection, but can also arise through (a special mode of) reasoning

about what to do.

Gold and Sugden (2006)

But what is team reasoning? I’m so glad you asked ...

‘somebody team reasons if she works out the best possible feasible

combination of actions for all the members of her team, then does

her part in it.’

\citep[p.~121]{Bacharach:2006fk}

Bacharach (2006, p. 121)
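Bacharach's description can be illustrated, very roughly, with the click-on-A-or-B game. This is only a sketch under stated assumptions: it assumes that 'best' for the team means the highest sum of the members' payoffs, which is a simplification adopted for illustration and not a claim about Bacharach's own theory. The names `team_value` and `team_reason` are hypothetical.

```python
from itertools import product

ACTIONS = ("A", "B")

# Payoffs in pounds as (member 0, member 1), from the A-or-B example.
PAYOFFS = {
    ("A", "A"): (100, 100),
    ("B", "B"): (1, 1),
    ("A", "B"): (0, 0),
    ("B", "A"): (0, 0),
}


def team_value(profile):
    """Assumption: the team's objective is the sum of members' payoffs."""
    return sum(PAYOFFS[profile])


def team_reason(member):
    """Find the best combination of actions for the team, then return
    this member's part in it."""
    best_profile = max(product(ACTIONS, repeat=2), key=team_value)
    return best_profile[member]


choices = [team_reason(member) for member in (0, 1)]
print(choices)  # ['A', 'A']
```

Notice that neither member asks what the other is likely to do. Each asks what the team should do and then does her part, which is why the reasoning yields A without any prior expectation about the other's choice.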

These are the questions you would want to answer if you were going to pursue team

reasoning. In this lecture series I decided there isn’t time to do that this year.

1. What is team reasoning?

2. In what sense does team reasoning give rise to aggregate agents?

3. How might team reasoning be used in constructing a theory of shared agency?

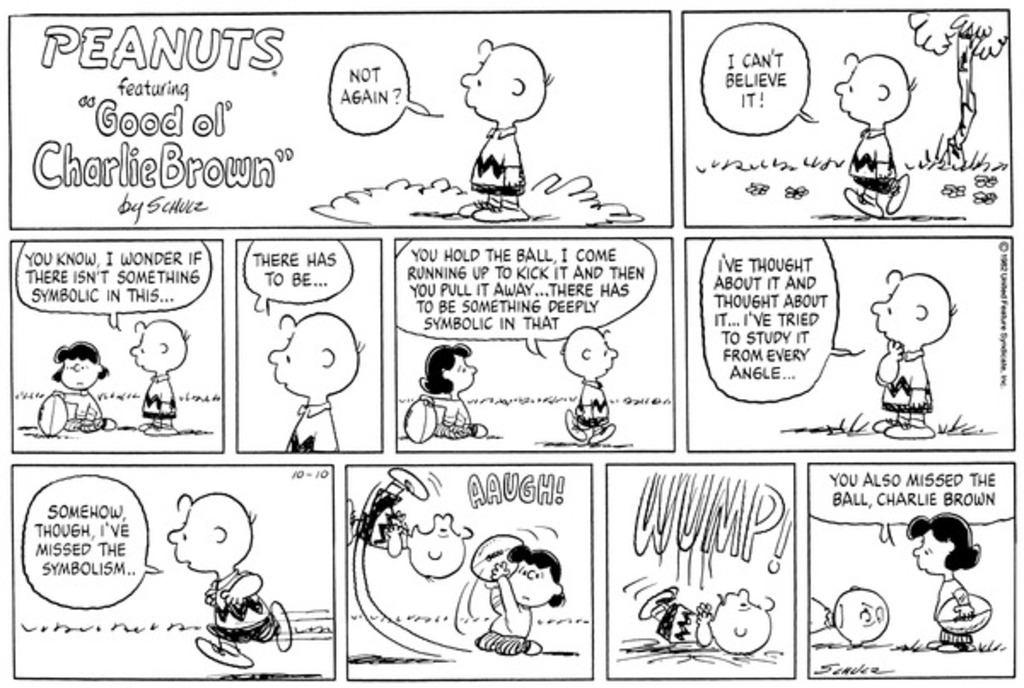

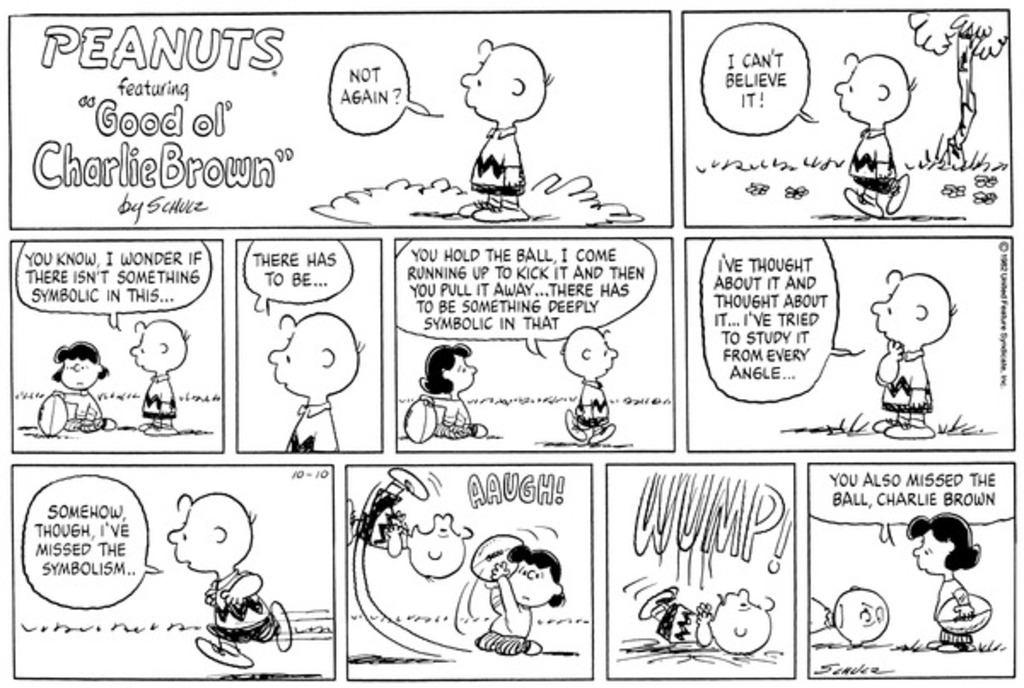

\section{Schmid’s ‘Charlie Brown Phenomenon’}

The Charlie Brown phenomenon is

‘the possibility of being intentionally engaged in a joint action in a situation

in which overwhelming evidence to assume that the others will not do their part

is recognized to exist’ (Schmid 2013, p. 54).

What does the existence of this phenomenon suggest about shared agency?

‘participants in joint action are usually focused on whatever it is they are

jointly doing rather than on each other. Where joint action goes smoothly,

the participants are not thinking about the others anymore than they are

thinking about themselves’

\citep[p.~37]{Schmid:2013}

Schmid (2013, p. 37)

I don’t think this observation is an argument or an objection, but it

is suggestive.

In Pacherie’s account, we need beliefs about the others and their

team reasoning.

In Bratman’s account we need intentions about others’ intentions.

‘cooperators normatively expect their partners to cooperate; they do not predict their cooperation’

Dominant View: ‘the representation of the participation of the others has a mind-to-world direction of fit.’

Alternative View: ‘the representation of the participation of the others has a world-to-mind direction of fit.’

\citep[p.~38]{Schmid:2013}

Schmid (2013, p. 38)

OK, this is just an assertion. What’s the argument for it?

‘As his intention was to hit the ball rather than just to try to hit the ball,

Charlie’s intention either represents Lucy (cognitively) as doing her part

(holding the ball steady) or at least is incompatible with the belief that

Lucy will not hold the ball. Already in the 1950s, it becomes increasingly

clear that Lucy will pull the ball away. This evidence is further corroborated

over the following decades. Therefore, Charlie should not be optimistic. By

continuing to intend to kick the ball, it seems that Charlie violates the sufficient

reason condition. As he has reason to believe that Lucy will pull away the ball,

there is insufficient reason for optimism that he will be able to kick it.

‘Thus, in the view developed so far, and endorsed by such authors as

John Searle (2010), Raimo Tuomela, and Michael Bratman, there must be something

structurally wrong with Charlie’s intentionality; in his right mind, he cannot

intend to kick the ball. According to this line of analysis of joint action,

Charlie is simply unreasonable.’

BUT: ‘People think that Lucy rather than Charlie is at fault’ (Schmid 2013, p. 46)

Participants reason about what team-directed preferences require (so do not distinguish themselves from others).

‘participants in a joint action represent their partners as doing their parts in the same way as individual intentions implicitly represent the agent as continuing to be willing and able to perform the action until the intention’s conditions of satisfaction are reached’

‘individual agents of temporally extended actions “represent” their own future intentions and actions in the same way in which cooperators represent their partners’ intentions and actions.’

\citep[p.~49]{Schmid:2013}

Schmid (2013, p. 49)

How do cooperators represent their partners’ actions?

‘this representation is neither (purely) cognitive nor (purely) normative, but rather a very peculiar combination of the two.’

\citep[p.~50]{Schmid:2013}

Compare representing your own actions:

‘An individual with a purely cognitive stance toward his own future self’s behavior and no normative expectation is a predictor of his behavior rather than an intender of his future action; similarly, an individual with a purely normative stance toward his own future behavior is a judge over [...] his future behavior rather than an agent.’

\citep[p.~50]{Schmid:2013}

Schmid (2013, p. 50)

I don’t want to go all the way with Schmid. In particular, I don’t quite

accept his suggestion that

‘A cooperator’s basic attitude toward his partner is such that he (implicitly) assumes that by representing the other as doing his part he makes it more likely that the other will in fact do his part because it provides the other with a motivating and a normative reason to do so.’ (p. 50)

How can we turn these observations into a theory?

Recall that we want a theory in order to be able to distinguish genuine joint

action from parallel but merely individual action.

PS: Are we still talking about aggregate agents?

Yes: from the point of view of the agents. (If Schmid is right, the basic

attitude I have towards your actions does not distinguish your actions from mine.)

\section{Interim Summary}

Our question is,

What distinguishes genuine joint actions from parallel but merely individual actions?

So far we have considered objections to some of the leading attempts to answer this question.

What has gone wrong?

Summary so far

The Simple View (but counterexample)

The shared intention strategy

--- Bratman (but counterexample)

--- Gilbert (but are there joint commitments?)

--- Ludwig, Pacherie, Searle, Tollefsen, ... (not covered)

The ‘aggregate subject’ strategy [nb plural vs aggregate]

--- reflectively constituted (Pettit and List)

--- emotionally constituted (Helm; not covered)

Btw, it seems to me that there’s an ethical issue.

If there are aggregate subjects, are we under obligations not to

end their lives? Or is the life of an aggregate subject somehow not as valuable as the life

of a non-aggregate one?

Team reasoning (not covered)

Diagnosis

Too reflective!

Grounded merely on intuitive contrasts!

I think our problems have at least two sources.